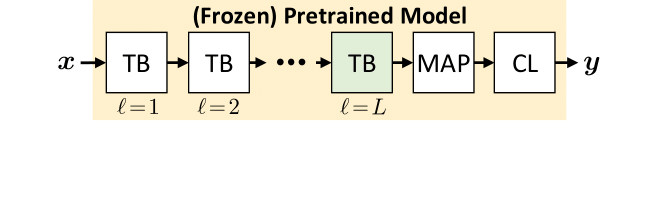

Method Overview

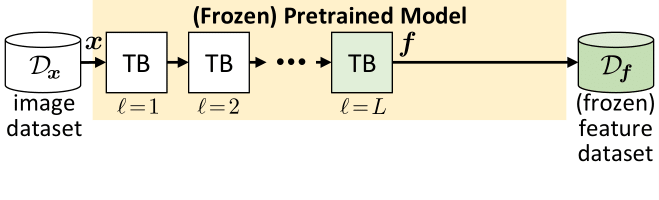

Given a (frozen) pretrained vision transformer with L transformer blocks (TBs), a multi-head attention pooling (MAP) layer, and a classification layer (CL), we select its L-th transformer block for caching.

Next, we feed images x to cache (frozen) features f.

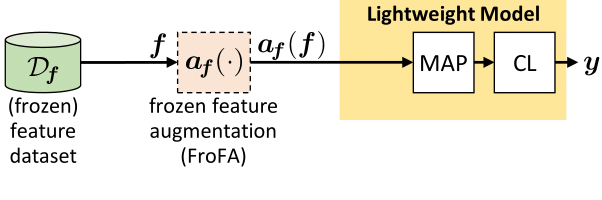
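The caching step can be sketched as follows. This is a minimal illustration, not the authors' pipeline: `make_frozen_encoder` and `cache_features` are hypothetical names, and the "encoder" is a fixed random projection standing in for the frozen ViT up to its L-th block. The point is only that the encoder is run once, without gradients, and its outputs are stored.

```python
import numpy as np

def make_frozen_encoder(patch_dim, channels, seed=0):
    """Hypothetical stand-in for a frozen ViT: a fixed random projection."""
    rng = np.random.default_rng(seed)
    w = rng.normal(size=(patch_dim, channels))  # never updated afterwards

    def encode(images, num_tokens):
        # Split each image into `num_tokens` flat patches and project them.
        batch = images.shape[0]
        patches = images.reshape(batch, num_tokens, -1)
        return np.tanh(patches @ w)  # (batch, num_tokens, channels)

    return encode

def cache_features(encode, images, num_tokens, batch_size=2):
    """Run the frozen encoder once over all images x and keep the features f."""
    chunks = []
    for start in range(0, len(images), batch_size):
        chunks.append(encode(images[start:start + batch_size], num_tokens))
    return np.concatenate(chunks, axis=0)

# Toy usage: five 8x8 RGB "images", four tokens each, sixteen channels.
images = np.random.default_rng(1).uniform(size=(5, 8, 8, 3))
encode = make_frozen_encoder(patch_dim=8 * 8 * 3 // 4, channels=16)
f = cache_features(encode, images, num_tokens=4)
```

Because the encoder is frozen, `f` only has to be computed once and can be reused across every training run of the lightweight model.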

Finally, we use the cached features f to train a lightweight model on top. We investigate the effect of frozen feature augmentation (FroFA) applied on cached features in a few-shot setup. We use the same setup for all FroFA experiments.

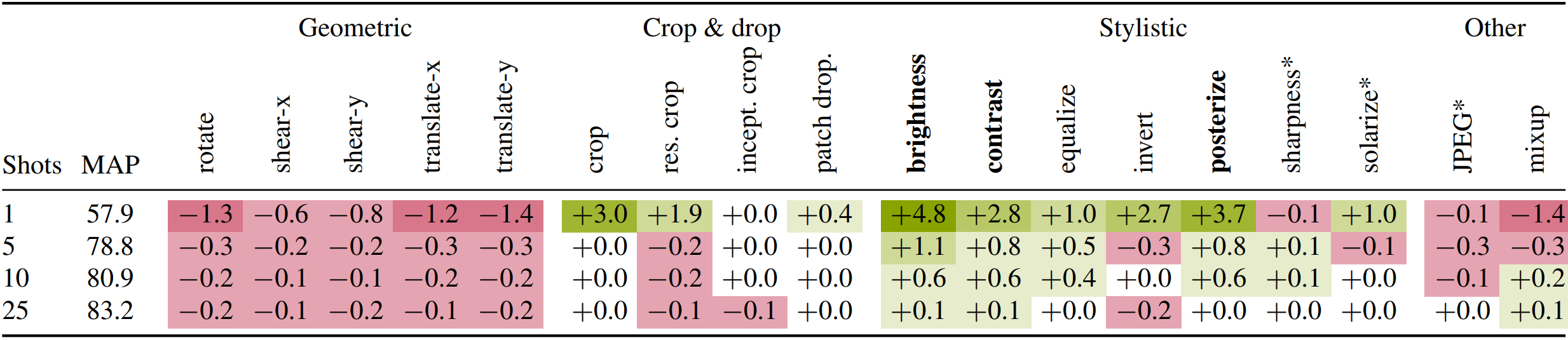

Investigating 18+2 Frozen Feature Augmentations (FroFAs)

We first investigate eighteen standard image augmentations in a frozen feature setup using an L/16 ViT pretrained on JFT-3B. In these experiments, we cache features on few-shotted ILSVRC-2012 and train a lightweight model on top of augmented frozen features. We identify three strong augmentations: brightness, contrast, and posterize. Results for two additional augmentations are provided in the supplementary.

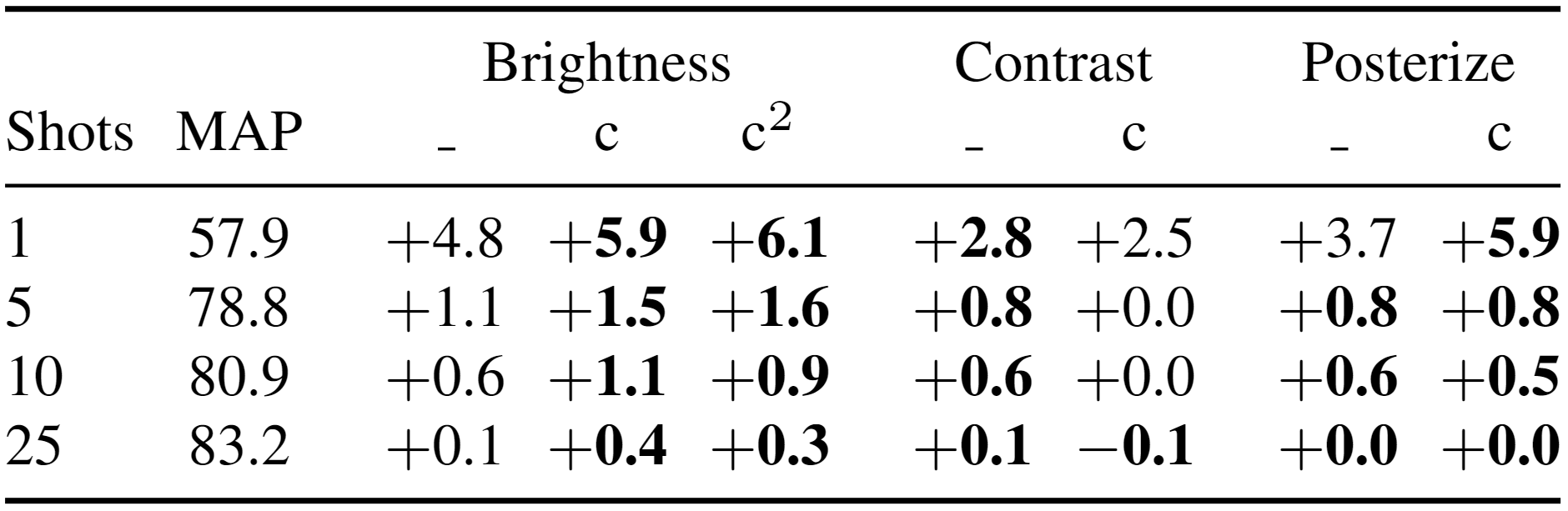
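For readers unfamiliar with few-shot subsets, the following sketch shows one common way to draw a k-shot training set from a labeled dataset: pick k examples per class at random. The function name `sample_few_shot_indices` and the seeding scheme are assumptions for illustration; the page does not state how the few-shot ILSVRC-2012 subsets were drawn.

```python
import numpy as np

def sample_few_shot_indices(labels, shots, seed=0):
    """Return indices of `shots` randomly chosen examples for every class."""
    rng = np.random.default_rng(seed)
    labels = np.asarray(labels)
    picked = []
    for cls in np.unique(labels):
        cls_idx = np.flatnonzero(labels == cls)
        if len(cls_idx) < shots:
            raise ValueError(f"class {cls} has fewer than {shots} examples")
        # Sample without replacement so no example is used twice.
        picked.append(rng.choice(cls_idx, size=shots, replace=False))
    return np.concatenate(picked)

# Toy usage: 3 classes with 10 examples each, 5 shots per class.
labels = np.repeat(np.arange(3), 10)
idx = sample_few_shot_indices(labels, shots=5)
```

The returned indices can then be used to select rows of the cached features and their labels.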

A Closer Look at Brightness, Contrast, and Posterize FroFAs

We apply brightness, contrast, and posterize in a feature-wise manner (c or c2). Again, we use an L/16 ViT pretrained on JFT-3B, cache features on few-shotted ILSVRC-2012, and train a lightweight model on top of augmented frozen features. We identify brightness c2FroFA as the best-performing FroFA.

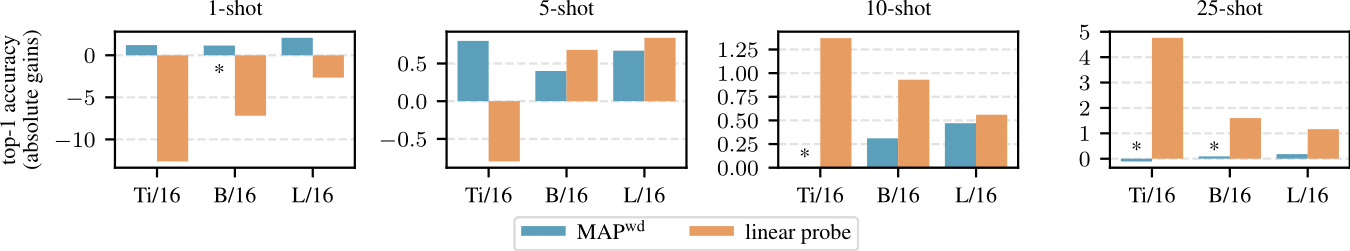
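A brightness augmentation on frozen features might look like the sketch below. Several things here are assumptions rather than details stated on this page: features are min-max mapped to [0, 1], shifted by a random offset, clipped, and mapped back; the "c" variant is read as sampling one offset per channel; and the "c2" variant is read as additionally computing the min-max statistics per channel. The function name `brightness_frofa` and the `strength` parameter are hypothetical.

```python
import numpy as np

def brightness_frofa(f, strength, rng, mode="c2", eps=1e-8):
    """Brightness-style augmentation of cached features f with shape (N, C).

    mode="default": global min/max, one offset for the whole feature map.
    mode="c":       global min/max, one offset per channel (assumed reading).
    mode="c2":      per-channel min/max and per-channel offsets (assumed reading).
    """
    if mode not in ("default", "c", "c2"):
        raise ValueError(f"unknown mode: {mode}")
    axis = 0 if mode == "c2" else None  # per-channel vs. global statistics
    f_min = f.min(axis=axis, keepdims=True)
    f_max = f.max(axis=axis, keepdims=True)
    scale = np.maximum(f_max - f_min, eps)  # avoid division by zero
    x = (f - f_min) / scale  # map features to [0, 1]
    n_offsets = 1 if mode == "default" else f.shape[1]
    offset = rng.uniform(-strength, strength, size=(1, n_offsets))
    x = np.clip(x + offset, 0.0, 1.0)  # brightness shift, kept inside [0, 1]
    return x * scale + f_min  # map back to the original feature range

# Toy usage: six tokens with four channels.
f = np.random.default_rng(0).normal(size=(6, 4))
f_aug = brightness_frofa(f, strength=0.3, rng=np.random.default_rng(1))
```

In this sketch the augmented features never leave the value range of the original features, and `strength=0` returns the input unchanged up to floating-point error.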

Our Best Working FroFA Yields Strong Performance Across Architectures, Pretraining Datasets, and Few-Shot Datasets

Average top-1 accuracy gains on few-shotted ILSVRC-2012, using frozen features from JFT-3B ViTs. We compare to a weight-decayed MAP (MAPwd) and L2-regularized linear probe baseline. Our method uses augmented frozen features while the baseline methods use unaltered frozen features. In almost all 5- to 25-shot settings, our method matches or surpasses both baselines.

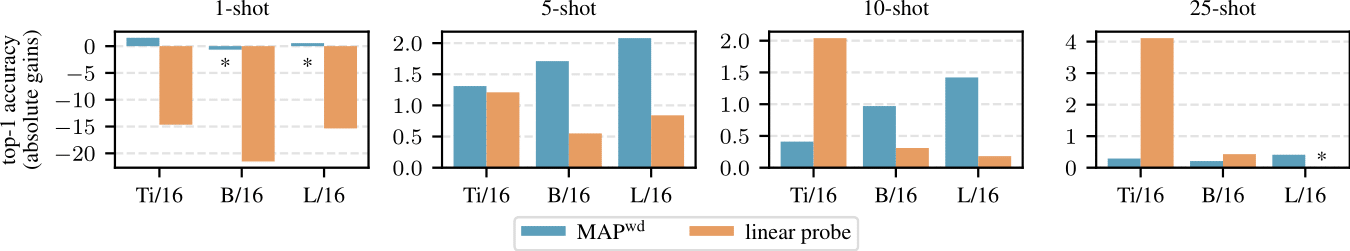

Average top-1 accuracy gains on few-shotted ILSVRC-2012, using frozen features from ImageNet-21k ViTs. We compare to a weight-decayed MAP (MAPwd) and L2-regularized linear probe baseline. Our method uses augmented frozen features while the baseline methods use unaltered frozen features. In all 5- to 25-shot settings, our method matches or surpasses both baselines.

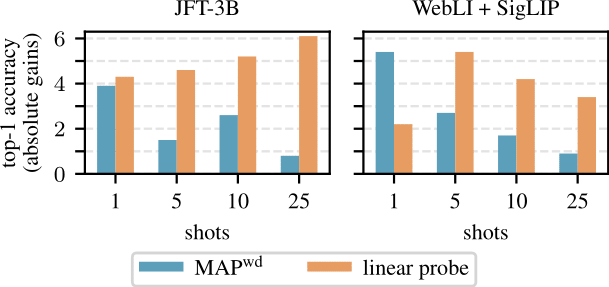

Average top-1 accuracy gains across seven few-shot test sets, including CIFAR100 and SUN397. We train on frozen features from an L/16 model pretrained on JFT-3B or an L/16 image encoder of a WebLI-SigLIP pretrained vision-language model. Our method uses augmented frozen features while the baseline methods use unaltered frozen features. On average, our method shows superior performance across shots for both settings.
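To make the L2-regularized linear probe baseline mentioned above more concrete, here is a minimal sketch that fits a linear classifier on pooled frozen features by closed-form ridge regression to one-hot targets. Whether the baseline was fit in closed form or by gradient descent is not stated on this page, so treat the formulation, the function names `fit_linear_probe` and `predict`, and the `l2` value as assumptions.

```python
import numpy as np

def fit_linear_probe(features, labels, num_classes, l2=1e-2):
    """Ridge regression from pooled features (n, D) to one-hot class targets."""
    x = np.concatenate([features, np.ones((len(features), 1))], axis=1)  # bias column
    y = np.eye(num_classes)[labels]  # one-hot targets, shape (n, num_classes)
    reg = l2 * np.eye(x.shape[1])
    reg[-1, -1] = 0.0  # leave the bias term unregularized
    return np.linalg.solve(x.T @ x + reg, x.T @ y)

def predict(w, features):
    """Predict the class with the largest regression output."""
    x = np.concatenate([features, np.ones((len(features), 1))], axis=1)
    return (x @ w).argmax(axis=1)

# Toy usage: three well-separated clusters, five "shots" per class.
rng = np.random.default_rng(0)
centers = np.array([[3.0, 0.0], [-3.0, 0.0], [0.0, 3.0]])
labels = np.repeat(np.arange(3), 5)
features = centers[labels] + 0.1 * rng.normal(size=(15, 2))
w = fit_linear_probe(features, labels, num_classes=3)
train_acc = (predict(w, features) == labels).mean()
```

The same cached features can feed this probe unaltered, which matches how the baselines are described: they train on unaltered frozen features, while FroFA trains on augmented ones.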

BibTeX

@InProceedings{Baer2024,
  author    = {Andreas B\"ar and Neil Houlsby and Mostafa Dehghani and Manoj Kumar},
  booktitle = {Proc.\ of CVPR},
  title     = {{Frozen Feature Augmentation for Few-Shot Image Classification}},
  month     = jun,
  year      = {2024},
  address   = {Seattle, WA, USA},
  pages     = {16046--16057},
}